Why QoS video monitoring fails to reflect real user experience at scale

By Yann Caron, Chief Sales Officer

Video quality monitoring has become a standard operational pillar for streaming platforms, broadcasters, and app publishers. Dashboards are full. Metrics are flowing. KPIs are green.

And yet, viewers still complain.

Viewers report buffering during ads, frozen screens on Smart TVs, audio out of sync, black screens after app updates, or navigation paths that simply fail. These issues often occur while traditional monitoring reports show “normal” performance.

The problem is not a lack of data.

It is a disconnect between what is measured and what users actually experience on real devices.

The illusion of visibility created by traditional monitoring solutions

Most video quality monitoring solutions rely on indirect indicators of performance. They measure what is technically observable from the network, the player, or the backend infrastructure.

Commonly tracked metrics include:

- Bitrate and throughput

- Startup time

- Error rates

- CDN availability

- Player logs

- QoS indicators derived from network data

These metrics are valuable. But they are proxies, not observations.

They describe how a stream should behave under normal conditions. They do not confirm what actually happens on screen, in a living room, on a Smart TV, under real network constraints, with real UI states and real user interactions.

A stream may start successfully, report normal bitrate, and show no errors in monitoring tools, while the viewer is stuck on a black screen with no way to recover.

As a result, monitoring often answers the wrong question:

“Is the system behaving as expected?”

instead of

“Is the user able to watch content correctly?”

Why user experience cannot be inferred reliably from QoS metrics alone

User experience is not a single variable. It unfolds on a screen, through an interface, on a physical device, and depends on multiple layers interacting in real time.

Those layers include UI behavior, device software, remote control handling, ad logic, network variability, and content-specific conditions.

Many critical failures occur after the stream technically starts:

- Playback launches, but the screen remains black

- Video plays, but the interface becomes unresponsive

- Ads load, but never exit

- The app resumes incorrectly after standby

- Focus is lost in the UI, blocking navigation

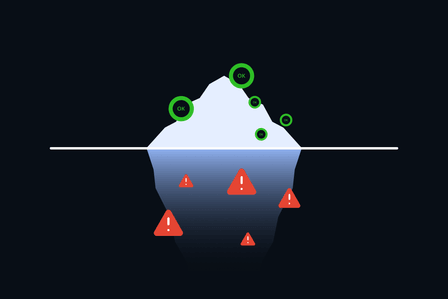

From a metrics perspective, the stream exists.

From a user perspective, the service is broken.

This is where traditional QoS monitoring reaches its limits.

Scale makes the problem worse, not better

At small scale, teams can manually validate issues, reproduce bugs, or rely on user feedback.

At scale, this breaks down.

Modern video services must support:

- Dozens of device types

- Multiple OS versions

- Frequent app releases

- Personalized user journeys

- Regional network conditions

- Live, VOD, FAST, and ad-supported content

In this context, aggregated metrics smooth out real failures. Rare but impactful issues disappear into averages. Device-specific bugs are diluted across populations. UI regressions go unnoticed until churn increases.
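To make the averaging effect concrete, the Python sketch below uses hypothetical session counts (illustrative numbers, not measurements) for three device models. One model has a severe device-specific bug, yet the pooled failure rate stays low enough to look healthy on a global dashboard.

```python
# Hypothetical, illustrative session counts; these are NOT measured data.
# device model -> (total sessions, sessions that ended on a black screen)
sessions_by_device = {
    "device_a": (90_000, 90),
    "device_b": (9_000, 9),
    "device_c": (1_000, 200),  # one model with a severe, device-specific bug
}


def failure_rate(sessions: int, failures: int) -> float:
    """Share of sessions that failed; 0.0 when there were no sessions."""
    return failures / sessions if sessions else 0.0


# Aggregate view: all devices pooled together, as a global dashboard would show.
total_sessions = sum(s for s, _ in sessions_by_device.values())
total_failures = sum(f for _, f in sessions_by_device.values())
aggregate = failure_rate(total_sessions, total_failures)

# Per-device view: the same data, without averaging it away.
per_device = {name: failure_rate(s, f) for name, (s, f) in sessions_by_device.items()}

# Flag devices whose failure rate is far above the pooled rate (10x is an arbitrary choice).
flagged = [name for name, rate in per_device.items() if rate > 10 * aggregate]

print(f"aggregate: {aggregate:.2%}")
for name, rate in per_device.items():
    print(f"{name}: {rate:.2%}")
print("flagged:", flagged)
```

With these numbers the aggregate failure rate is about 0.3%, while device_c fails on 20% of its sessions. Only a per-device breakdown, or direct observation of each device, brings that failure to the surface.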

The more scale you add, the further metrics drift from reality.

The blind spot: what happens on real devices, on screen

The missing piece in most monitoring strategies is simple:

direct observation of real user journeys on real devices.

Only real-device monitoring can confirm:

- What is actually displayed on screen

- Whether playback progresses visually

- If ads really appear and exit properly

- If navigation flows succeed end to end

- If recovery mechanisms work after failures
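One way to check what is actually displayed, and whether playback progresses visually, is to analyze frames captured from the device's video output. The Python sketch below is a simplified, hypothetical illustration, not Witbe's method: it assumes frames arrive as flat lists of 8-bit luma values and uses arbitrary thresholds to flag a black screen or a frozen picture.

```python
from typing import Sequence

# Assumption for this sketch: each captured frame is a flat list of 8-bit luma (Y) values.
Frame = Sequence[int]

BLACK_LUMA_THRESHOLD = 16     # hypothetical: mean luma at or below this counts as black
FROZEN_DIFF_THRESHOLD = 1.0   # hypothetical: mean absolute change below this counts as "no motion"


def mean_luma(frame: Frame) -> float:
    return sum(frame) / len(frame)


def is_black(frame: Frame) -> bool:
    return mean_luma(frame) <= BLACK_LUMA_THRESHOLD


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel luma change between two frames of the same size."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def playback_progresses(frames: Sequence[Frame]) -> bool:
    """True if at least one pair of consecutive frames differs visibly."""
    return any(
        mean_abs_diff(prev, cur) >= FROZEN_DIFF_THRESHOLD
        for prev, cur in zip(frames, frames[1:])
    )


def screen_verdict(frames: Sequence[Frame]) -> str:
    """Classify a short burst of screen captures taken during playback."""
    if len(frames) < 2:
        raise ValueError("need at least two frames to judge motion")
    if all(is_black(f) for f in frames):
        return "black_screen"
    if not playback_progresses(frames):
        return "frozen"
    return "playing"


# Usage with tiny synthetic 4x4 frames.
black = [0] * 16
gray = [128] * 16
brighter = [140] * 16
print(screen_verdict([black, black]))     # black_screen
print(screen_verdict([gray, gray]))       # frozen
print(screen_verdict([gray, brighter]))   # playing
```

A production system would also need to tolerate legitimately dark or static content, such as a night scene or a title card, which these naive thresholds would misclassify.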

This is not about simulating behavior.

It is about watching the same screen the viewer sees, at scale, automatically.

Why “experience monitoring” often still falls short

Many tools now claim to monitor user experience. In practice, this often means:

- Player-side instrumentation

- Synthetic sessions without UI interaction

- Emulated environments

- Partial device coverage

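These approaches still assume that experience can be inferred. Reflecting real user experience requires watching what real devices actually display, across complete user journeys.

Anything less reintroduces blind spots.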

A different approach: observing experience, not estimating it

To reflect real user experience at scale, monitoring must evolve from inference to observation.

This means:

- Running automated scenarios on real devices

- Navigating the app as a user would

- Validating what appears on screen

- Measuring performance in context, not isolation

- Correlating KPIs with visual proof
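The scenario-based approach above can be sketched as a loop over user-level steps: press a remote-control key, then poll the screen until an expected state appears or a timeout expires, recording the elapsed time and the last observed screen as evidence. Everything here is hypothetical: the Device interface, its press and capture_screen methods, and the assumption that the screen has already been turned into a text description (for example by OCR or image matching). FakeDevice stands in for real hardware so the sketch runs anywhere.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable, Protocol


class Device(Protocol):
    """Hypothetical interface to a physical device under test."""
    def press(self, key: str) -> None: ...
    def capture_screen(self) -> str: ...


@dataclass
class Step:
    name: str
    key: str                          # remote-control key to press
    expect: Callable[[str], bool]     # check applied to what is on screen
    timeout_s: float = 5.0


@dataclass
class StepResult:
    name: str
    passed: bool
    elapsed_s: float                  # time from key press to expected screen (or timeout)
    last_screen: str                  # kept as evidence of what the viewer saw


def run_scenario(device: Device, steps: list[Step], poll_s: float = 0.1) -> list[StepResult]:
    results = []
    for step in steps:
        device.press(step.key)
        start = time.monotonic()
        screen = device.capture_screen()
        # Poll until the expected screen shows up or the step times out.
        while not step.expect(screen) and time.monotonic() - start < step.timeout_s:
            time.sleep(poll_s)
            screen = device.capture_screen()
        passed = step.expect(screen)
        results.append(StepResult(step.name, passed, time.monotonic() - start, screen))
        if not passed:
            break  # a real viewer would be stuck here, so stop the journey
    return results


class FakeDevice:
    """Stand-in for real hardware; the screen transitions are made up."""
    def __init__(self, transitions: dict[tuple[str, str], str], start: str = "Home"):
        self.transitions = transitions
        self.screen = start

    def press(self, key: str) -> None:
        self.screen = self.transitions.get((self.screen, key), self.screen)

    def capture_screen(self) -> str:
        return self.screen


demo_device = FakeDevice({
    ("Home", "RIGHT"): "Home: Movies focused",
    ("Home: Movies focused", "OK"): "Player: playing",
    # No transition for BACK from the player: the app is stuck, like a lost-focus bug.
})
demo_steps = [
    Step("focus movies", "RIGHT", lambda s: "Movies focused" in s, timeout_s=0.2),
    Step("start playback", "OK", lambda s: s.startswith("Player: playing"), timeout_s=0.2),
    Step("return home", "BACK", lambda s: s.startswith("Home"), timeout_s=0.2),
]
results = run_scenario(demo_device, demo_steps, poll_s=0.05)
for r in results:
    print(r.name, "PASS" if r.passed else "FAIL", f"{r.elapsed_s:.2f}s", r.last_screen)
```

Stopping at the first failing step mirrors what a viewer experiences: once navigation is blocked, later steps in the journey cannot be reached.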

This approach does not replace metrics.

It grounds them in reality.

When a KPI degrades, teams can see why.

When a metric looks fine, teams can verify that the experience truly is fine.
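Correlating KPIs with visual proof can be as simple as pairing each player-side sample with an on-screen verdict for the same time window and classifying the combination. The sketch below assumes hypothetical data shapes, field names, and an arbitrary bitrate threshold. The telling case is "hidden_failure", where the metrics are green but the screen is not, as in the black-screen example described earlier.

```python
from dataclasses import dataclass


@dataclass
class PlayerMetrics:
    """Hypothetical player-side QoS sample; field names are illustrative."""
    bitrate_kbps: int
    error_count: int
    reported_state: str      # e.g. "playing", "buffering"


@dataclass
class ScreenObservation:
    """Hypothetical on-screen verdict for the same time window."""
    verdict: str             # e.g. "playing", "black_screen", "frozen"
    recording_ref: str       # pointer to the video recording kept as visual proof


MIN_BITRATE_KBPS = 1_500     # hypothetical "green" threshold


def metrics_green(m: PlayerMetrics) -> bool:
    return (
        m.error_count == 0
        and m.reported_state == "playing"
        and m.bitrate_kbps >= MIN_BITRATE_KBPS
    )


def correlate(m: PlayerMetrics, s: ScreenObservation) -> str:
    """Combine what the metrics claim with what the screen shows."""
    green = metrics_green(m)
    screen_ok = s.verdict == "playing"
    if green and screen_ok:
        return "ok"
    if green and not screen_ok:
        return "hidden_failure"           # KPIs look fine, the viewer sees a broken screen
    if not green and screen_ok:
        return "metric_only_degradation"  # KPIs dipped, but the viewer was not affected
    return "confirmed_failure"            # both sources agree something is wrong


finding = correlate(
    PlayerMetrics(bitrate_kbps=4_500, error_count=0, reported_state="playing"),
    ScreenObservation(verdict="black_screen", recording_ref="clip-0001.mp4"),
)
print(finding)  # hidden_failure
```

Keeping a recording reference alongside each verdict means a "hidden_failure" can be reviewed by watching exactly what the device displayed.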

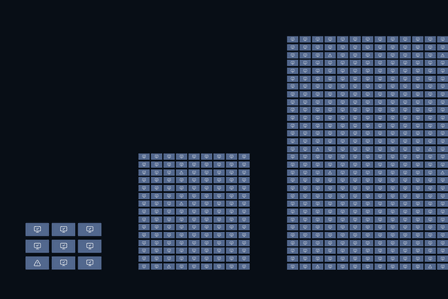

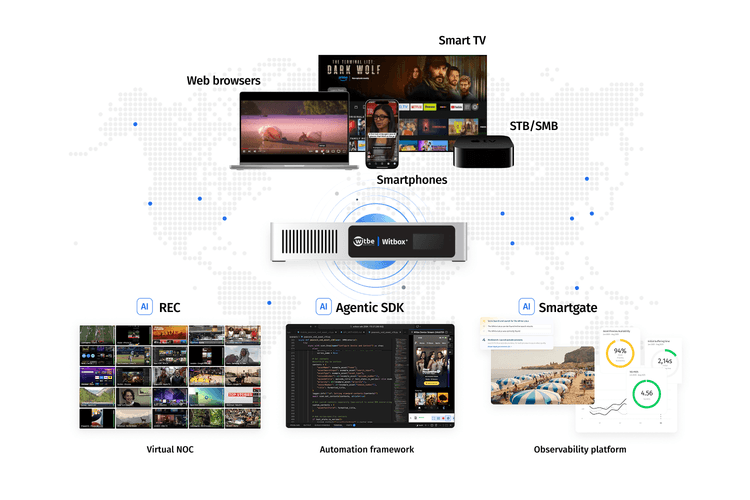

How Witbe addresses this gap

Closing this gap requires more than adding new metrics. It requires changing how monitoring is done.

Witbe was built around a simple principle:

you cannot guarantee video quality without observing it on real devices.

Witbe’s monitoring approach combines:

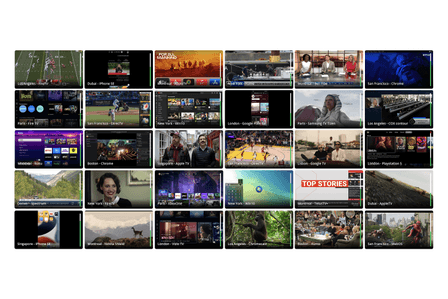

- Physical devices deployed worldwide

- Automated end-to-end user journeys

- On-screen validation and video recordings

- Deterministic KPI measurement

- Scalable execution across devices and regions

This allows operators to monitor not just whether a stream exists, but whether the service actually works for viewers.

Conclusion: QoS metrics do not equal experience (QoE)

Video quality monitoring is essential.

But QoS metrics alone do not reflect real user experience at scale.

As streaming ecosystems grow more complex, the gap between measured performance and lived experience widens. Closing that gap requires a shift in mindset: from estimating experience to observing it directly.

The future of video quality monitoring is not about more data.

It is about seeing, at scale, what viewers actually see.